A Local AI Model Setup for Apple Macs

In this post we’ll cover how to get multiple machine learning models running on an Apple Mac using Ollama for model management, open-webui for interactive chat, and stable-diffusion-webui for image generation.

Prerequisites

We’re going to cover installing Oh My Zsh and Homebrew, then we’re going to install Python and Node.js which we’ll need to run open-webui, web app for using the models. I recommend always, always using a version manager for those last two, especially on a Mac. For Node there’s nvm and for Python there’s, let’s just say, plenty of options, and the one we’ll use is pyenv. Both let you install and switch between multiple versions of Node and Python as well as set up specific dedicated environments. Finally we’re going to install git so we can download the web app. This is for completeness—I imagine plenty of you will have these setup already, if so skip ahead to the next section on Ollama.

Open the Terminal app and run this command from the Homebrew site:

$ /bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"and follow the installation instructions to make the brew command available on the command line.

From the Oh My Zsh site, we’ll get a corresponding installation command we can run to install its flavor of the Zsh shell. Now, you doin’t strictly need Oh My Zsh or Zsh, any shell will do, but we’ll cover it for completeness since some of the instructions will be specific to it and the zsh shell.

$ sh -c "$(curl -fsSL https://raw.githubusercontent.com/ohmyzsh/ohmyzsh/master/tools/install.sh)"and follow the installation instructions.

For pyenv, we can run the following from the command line:

$ brew update

$ brew install pyenvThen follow the rest of the post-installation steps, including setting up your shell environment for pyenv. The site has instructions for your shell, here’s the commands for Zsh (which the installer will also tell you about):

$ echo 'export PYENV_ROOT="$HOME/.pyenv"' >> ~/.zshrc

$ echo '[[ -d $PYENV_ROOT/bin ]] && export PATH="$PYENV_ROOT/bin:$PATH"' >> ~/.zshrc

$ echo 'eval "$(pyenv init -)"' >> ~/.zshrc

$ exec "$SHELL"

$ pyenv help # if this works, you're setFor this setup we’ll installed Python 3.11.9, the highest available version of 3.11 at the time of writing and which works with open-webui:

$ pyenv install 3.11.9

$ pyenv install -l # will show 3.11.9 is availableFor managing Node installations, we can install nvm using Homebrew:

$ brew update

$ brew install nvm

$ nvm help # if this works, you're setand then we’ll use the current long term support (‘lts’) version of Node.

$ nvm install --ltsWe’ll also want Git, to install some projects, and can install it with Homebrew:

$ brew update

$ brew install gitWith those prerequisites in place, onto the fun stuff.

Installing Ollama

Ollama is an incredible open source project that lets you install and manage lots of different lange language models (LLMs) locally on your Mac. You can download an installer from the Ollama web site unzip with a double click, drag it to your Applications folder, and run it. It will appear on the taskbar, be added to the command line, and going to http://localhost:11434/ will let you know it’s running. The project supports an impressive library of current models. Let’s add the phi3 and llama2:7b models using the command line:

$ ollama pull phi3

$ ollama pull llama3:7bAnd we can run them to create a chat window in the command line:

$ ollama run phi3And that’s it. You now have a world of AI at your finger tips. I should mention that you can access Ollama programmatically via an api, which is how it integrates under the hood with open-webui, but for this post, we’ll keep our focus on a browser based experience.

Installing open-webui

We have LLMs running locally. Let’s get a front end for them via open-webui, another open source project that provides a really impressive and easy to use web app for your browser.

Go to https://github.com/open-webui/open-webui, grab the repo name and clone it with git. We’ll use a folder called projects, but you can keep these anywhere

$ cd ~

$ mkdir projects # or where ever you like to keep code

$ cd projects

$

$ git clone git@github.com:open-webui/open-webui.git

$ cd open-webuiTo run open-webui we’ll need a Node based frontend and a Python based backend. To set these up we’ll use the nvm and pyenv environment managers. Let’s start with the Python environment using the 3.11.9 version we set up earlier to create an environment, activate it, and install the projects’ requirements into that environment:

# create then activate the enviroment

$ pyenv virtualenv 3.11.9 open-webui

$ pyenv activate open-webui

# go to the backend folder and set it up

$ cd ~/projects/open-webui

$ cd backend

$ pip install -r requirements.txt -UTo get our website into a browser, we’ll also need to setup a Node frontend. From the main project folder, tell nvm to use the long term version we added earlier, install the dependencies, and build the front end:

$ cd ~/projects/open-webui

$ nvm use --lts

$ npm install

$ npm run buildOnce it’s done you can start the web app from the backend folder:

$ cd ~/projects/open-webui

$ cd backend

$

$ ./start.sh(2024-06-18) if you get an error like this:` ModuleNotFoundError: No module named 'tenacity.asyncio'` you can fix it by downgrading tenacity using pip, and then retrying start.

$ cd ~/projects/open-webui

$ cd backend

$ pip install tenacity==8.3.0

$ ./start.shUsing open-webui

We can go to http://localhost:8080 where we’ll be asked to create an account and sign in. Now, this is entirely local to your setup, you are not creating an online account. The open-webui project is comprehensive and designed to be used in team setups, but to emphasise, the account setup here is entirely local to you, so feel free to add any (can be fake) email and password you want. After that, we should be up and running.

oi home

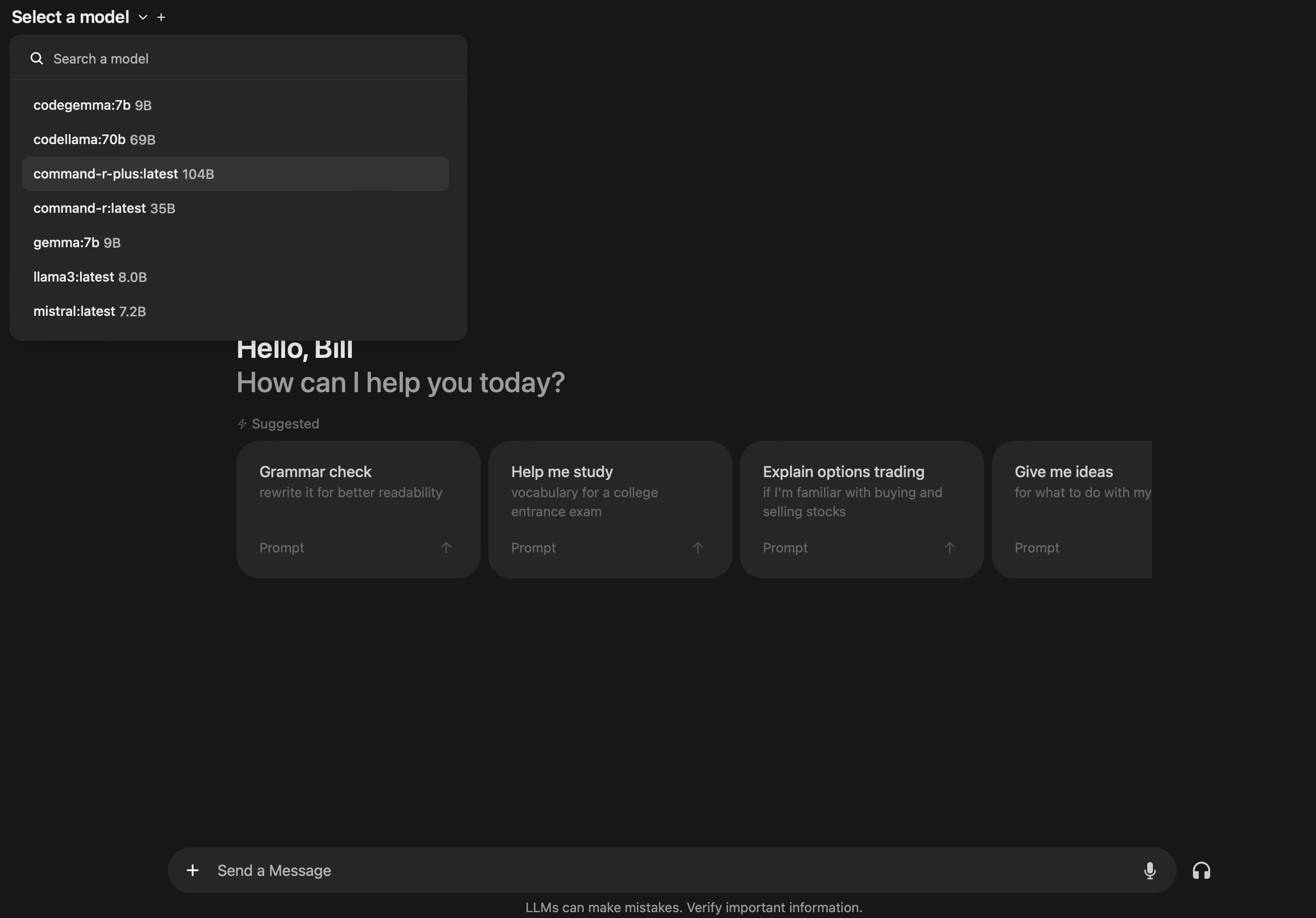

Fancy! Now we have a slick interactive web app we can use with multiple freely available AI models. To get going let’s first tell open-webui where ollama is running in via settings/admin/models. The defaults here will be the same as the Ollama installation, so we can just go ahead and confirm the setup.

Now we can can click on new chat, select one the models and start a session.

As mentioned, open-webui is comprehensive, with plenty of settings and options. If you’re used to using apps like ChatGPT or Perplexity the interface will be familiar. In the chat window you can have responses read aloud, have them regenerated, edit chat text, send like/dislike feedback to the model, and see performance details like response times and token sizes. It’s really quite an impressive app.

oi session taskbar

The workspace area in particular is worth mention. You can save your prompts, create system prompts, download community prompts (you will need a separate online account for that), view available models, add documents for analysis and install extension tools. Again, impressive.

oi admin prompts

You can even use the app to tell ollama to download other models with one caveat I’ve found that the larger models can time out via the browser—if that happens you can pull them from the ollama command line instead and they’ll be picked up by open-webui. The open-webui community also creates their own models (eg using fine tuning), akin to plugin gpts.

oi admin models

And you can ask open-web to generate images. Let’s talk about that.

One More Thing: Image Generation with Stable Diffusion

To get image generation support into open-webui, we can clone AUTOMATIC1111’s stable-diffusion-webui project:

$ cd ~/projects

$

$ git clone git@github.com:AUTOMATIC1111/stable-diffusion-webui.gitSimilar to open-webui, we’ll create and activate a dedicated Python environment for it, separate to open-webui’s. This allows both projects to have dedicated non-overlapping setups that don’t trample on each other. Using *a different shell* to the one for open-webui:

$ pyenv virtualenv 3.11.9 stable-diffusion-webui

$ pyenv activate stable-diffusion-webui

$

$ cd ~/projects/stable-diffusion-webui

$ pip install -r requirements.txt -UAnd then we can run the project, with the --api argument. to make the api available to open-webui:

$ cd ~/projects/stable-diffusion-webui

$

$ ./webui.sh --apiThis will also download a default model (‘v1-5-pruned-emaonly’). Like open-webui, you’ll get a fully fledged web app, this time at http://localhost:7860/.

stable-diffusion-webui home

You can install multiple image generation models and configure them (eg from http://civitai.com) and there’s a plethora of configuration options, that are especially useful if you want to mainly working with image generation. For our purposes we’ll leave everything at default.

Go back to open-webui and this time go to admin/settings/images:

oi admin images

It will have the defaults built in just like it had for Ollama, so go ahead and confirm them. And now you can generate images from any LLM response:

oi taskbar image generation

In Conclusion

And there we have it.

First off, a local setup is a great compliment to commercial cloud based offerings like ChatGPT and Perplexity. One particularly cool feature of open-webui is being able to switch models in a single chat session. This is great for understanding what the different models can do and their nuances. It’s fun for example to send models the output of others models and ask for opinions. More notable perhaps, these models do seem to act differently and seem to better at certain tasks than others. The code specialized models for example, are going to be better at software than their general counterparts (most of the time :). I get the long term idea behind generality of foundation models, but for the time being I feel like there will be more than one and more than one worth using. And of course, everything is running locally, so no concerns needed about what you’re sending to the cloud.

The models also represent different ‘needs and speeds’ for users. Microsoft’s phi3 for example is pretty quick at 3b parameters, and good when you want something simpler with more responsiveness; llama3:8b is something similar at 8b parameters. These will run decently on any M1 or beyond Apple Silicon. The larger models can go up to ‘cup of coffee’ levels of interaction times but with a corresponding depth of quality. The Command-R (35b params) and especially Command-R+ models (104b params), both from Cohere, both supporting 10 languages and optimised for company/enterprise use cases are not exactly quick. You’ll need a Mac with enough RAM to actually load up something like Command-R+. But qualitatively they do well on ‘thinkier’ or more analytical tasks where you want to go beyond everyday carries like improve this email, versus say having a conversation about a long form document, a paper, or a spreadsheet, or significant drafting/editorial work. Overall I’ve found having very easy access to multiple models is helping me build intuition and muscle on how to get the most out of them in general, a bonus on top of being able to pick and choose for task/responsiveness optima.

The final word should go to the open source projects. The models, they just want to learn, and keep improving at a rapid clip. The Ollama project does an utterly fantastic job of quantizing these models and scrunching them down to run on consumer hardware, so users can stay close to the frontier of what these things can do, and learn an important new tool. Both open-webui and stable-diffusion-webui are extraordinarily high quality user interface projects in their own right. easily exceeding most of what you’d see built by companies in-house, and to some extent more comparative to the UX designs offering byChatGPT and Perplexity, high quality products in their own right.