Machine Learning for Engineers: Book List 2024

At the end of 2019, I posted a book list for software engineers new to machine learning, grouped into four areas: Machine Learning & Algorithms, Tools & Frameworks, Data Science & Analysis, and Companion Mathematics. This post provides an updated book list using the same groups. The previous list covered 26 books; this iteration is more concise, covering 11. Of those, 3 are new, and 3 have seen updated editions in the interim.

Working with others and in my own work, I’ve found it useful to organise the books into four broad areas: machine learning & algorithms, mathematics & statistics, data science & analysis, and tools & frameworks. This allows diving into what’s interesting and motivating, rather than climbing a ladder.

As an engineer, you are going to be working with libraries and frameworks for the most part, but ideally you'll also have a grasp of the ideas underlying machine learning, so we'll start there before looking at tools. Intuition and background in data science and mathematics can develop over time, so we'll end on those two. That said, please do look around for other options if these don't seem right for you! Some books click for people in ways others don't. For example, I'm more or less math impaired, but Kuldeep Singh's book on linear algebra worked well for me once I found it, and it has opened up other books and material to me. And if you just want the list, there’s a sheet with the books.

Machine Learning & Algorithms

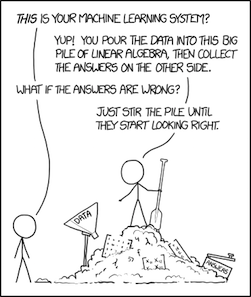

xkcd: Machine Learning

The Hundred-Page Machine Learning Book, Andriy Burkov. Burkov makes a good opening decision: to catalog only the techniques that have been seen to work well, such as neural networks, trees, and the main classification and clustering approaches. So while you won’t get a complete overview of the field, what’s here is relevant to practice. The chapter “Basic Practices” is a particularly good overview of the real-world work needed to obtain useful predictions, such as feature engineering, regularisation, hyper-parameter tuning, and performance assessment.
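One of the basic practices in feature engineering is standardising numeric features so they share a common scale. Here is a minimal plain-Python sketch of that step. It is not code from the book, and the function names `fit_standardizer` and `transform` are hypothetical. The point it shows is that the mean and standard deviation are learned from training data only and then reused on new data, so nothing about the test set leaks into training.

```python
from statistics import mean, pstdev


def fit_standardizer(train_column):
    """Learn the mean and (population) standard deviation from training data only."""
    mu = mean(train_column)
    sigma = pstdev(train_column)
    if sigma == 0:
        # A constant feature carries no information; avoid dividing by zero.
        sigma = 1.0
    return mu, sigma


def transform(column, mu, sigma):
    """Apply previously learned statistics to any data (train, validation, or test)."""
    return [(x - mu) / sigma for x in column]


mu, sigma = fit_standardizer([1.0, 3.0, 1.0, 3.0])
print(transform([1.0, 3.0], mu, sigma))  # → [-1.0, 1.0]
print(transform([5.0], mu, sigma))       # → [3.0], unseen value scaled with training statistics
```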

Understanding Deep Learning (UDL), by Simon J.D. Prince. This is not a light book by any means, and the author describes it as graduate level, but I’d argue it’s more accessible than the rightly well-regarded texts that came before it (it has displaced Deep Learning as my default in-depth treatment). It is extremely well written and well organised, with an excellent companion site. Chapters 2-7, coming in at around 100 pages, might be the best introduction to neural networks in print; they are certainly the best I have read. The math is ramped up gradually and presented alongside visual representations, which is very welcome for non-math types like myself. It is definitely rewarding for anyone willing to spend time working through it. For example, the 'toy example' of backpropagation in chapter 7 is worth working through with pen and paper to see how these things learn. Chapter 12 covers transformers, and if you've read What Is ChatGPT Doing … and Why Does It Work? and want to dig a bit deeper, it's a great next thing to read.
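To make backpropagation a little more concrete, here is a small plain-Python sketch. It is not the book's chapter 7 example. It uses a hypothetical one-input network with a single ReLU hidden unit and squared-error loss. `backprop` applies the chain rule by hand, from the loss back to each parameter. `numerical_grad` then checks those gradients with central finite differences, which is a useful habit alongside the pen-and-paper version.

```python
def forward(params, x):
    """Tiny network: one input, one ReLU hidden unit, one linear output."""
    w1, b1, w2, b2 = params
    pre = w1 * x + b1      # hidden unit pre-activation
    h = max(0.0, pre)      # ReLU activation
    y_hat = w2 * h + b2    # network output
    return pre, h, y_hat


def loss(params, x, y):
    """Squared error for a single example."""
    _, _, y_hat = forward(params, x)
    return (y_hat - y) ** 2


def backprop(params, x, y):
    """Gradients of the loss w.r.t. [w1, b1, w2, b2], via the chain rule."""
    w2 = params[2]
    pre, h, y_hat = forward(params, x)
    d_yhat = 2.0 * (y_hat - y)               # dL/dy_hat
    d_w2 = d_yhat * h                        # y_hat = w2 * h + b2
    d_b2 = d_yhat
    d_h = d_yhat * w2                        # pass the error back to the hidden unit
    d_pre = d_h * (1.0 if pre > 0 else 0.0)  # ReLU derivative (taken as 0 at or below zero)
    d_w1 = d_pre * x                         # pre = w1 * x + b1
    d_b1 = d_pre
    return [d_w1, d_b1, d_w2, d_b2]


def numerical_grad(params, x, y, eps=1e-6):
    """Central finite differences, used only to check backprop."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        grads.append((loss(up, x, y) - loss(down, x, y)) / (2 * eps))
    return grads


params = [0.5, 0.1, -1.2, 0.3]  # arbitrary starting weights
print(backprop(params, x=2.0, y=1.0))
print(numerical_grad(params, x=2.0, y=1.0))
```

If the two printed gradient lists disagree, the hand-derived chain rule has a mistake somewhere. That comparison is the whole point of the check.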

Foundations of Deep Reinforcement Learning, Laura Graesser and Wah Loon Keng. This covers a lot of ground :) Just as UDL has for deep learning, it has become my default introduction to reinforcement learning, replacing Sutton & Barto, and its mix of code, algorithms and math is very welcome. Why pick a book on reinforcement learning? It's important to real-world LLM usage via techniques such as reinforcement learning from human feedback (RLHF). As well as that, reinforcement learning has a pretty broad range, including experimentation (bandits are a simple/special-case form of reinforcement learning, kind of, and a general form of A/B testing, kind of). In that sense you get a lot of functional leverage as an engineer by having a grasp of the topic. Personally, though, I find reinforcement learning fascinating. Even OpenAI, way back, started out with reinforcement learning and released the Gym framework (which is used in this book).
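As a rough illustration of how a bandit relates to A/B testing, here is a minimal epsilon-greedy sketch in plain Python. It is not from the book. The three arms stand in for hypothetical page variants with made-up conversion rates, and the rewards are simulated. A fixed-split A/B test keeps sending the same share of traffic to each variant. This agent instead shifts traffic toward the variant that looks best so far, while still exploring a fraction `epsilon` of the time.

```python
import random


def epsilon_greedy(true_rates, steps=5000, epsilon=0.1, seed=0):
    """Simulate an epsilon-greedy bandit over arms with the given reward rates."""
    rng = random.Random(seed)
    n_arms = len(true_rates)
    counts = [0] * n_arms    # how often each arm was pulled
    values = [0.0] * n_arms  # running mean reward per arm
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(n_arms)                        # explore: random arm
        else:
            arm = max(range(n_arms), key=lambda a: values[a])  # exploit: best estimate so far
        reward = 1.0 if rng.random() < true_rates[arm] else 0.0  # simulated conversion
        counts[arm] += 1
        values[arm] += (reward - values[arm]) / counts[arm]    # incremental mean update
    return counts, values


# Hypothetical conversion rates for three page variants.
counts, values = epsilon_greedy([0.1, 0.5, 0.9])
print(counts)
print([round(v, 2) for v in values])
```

The counts should show most pulls going to the arm with the highest simulated rate. `epsilon` trades off how quickly that happens against how much traffic keeps going to exploration.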

Tools & Frameworks

xkcd: Python

When compiling the list in 2019, I mentioned the risk of it becoming "Machine Learning For Python Engineers". In 2024, Python remains the dominant language for applied machine learning and data science in production applications, and this is unlikely to change. While many frameworks use native C/C++ under the hood, Python is the medium through which they're used, making it the most useful language for developing an understanding of machine learning applications. A good example of Python’s durability in the space is An Introduction to Statistical Learning from the Data Science & Analysis section, which saw a 2023 edition based on Python rather than the original’s R.

Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow, 3rd Edition, by Aurélien Géron. The first half of this book covers common supervised approaches (classification, regression, support vector machines, trees and forests) using scikit-learn. The second half covers neural networks and deep learning with TensorFlow. There are two things Hands-On Machine Learning does well. First, instead of trying to cover the gamut of techniques, it focuses on the ones that tend to get used. Second, it flags important gotchas throughout: chapter 2 discusses data preparation, chapter 3 briefly covers performance measurement, and chapter 8 is given over entirely to dimensionality reduction. If you're coming at machine learning from a software background, these are good to be aware of.
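Performance measurement is one of the gotchas worth seeing early. On imbalanced data, a classifier that always predicts the majority class can look accurate while never finding a single positive case. The plain-Python sketch below computes accuracy, precision and recall by hand to show this. The data is invented for illustration. In practice, scikit-learn's `sklearn.metrics` module provides `accuracy_score`, `precision_score` and `recall_score` for the same job.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, false negatives and true negatives."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive:
            fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the labels."""
    return sum(a == p for a, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall(y_true, y_pred, positive=1):
    tp, fp, fn, _ = confusion_counts(y_true, y_pred, positive)
    # Define each ratio as 0.0 when its denominator is zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Imbalanced toy data: 1 positive case in 10.
y_true = [0] * 9 + [1]
always_negative = [0] * 10

print(accuracy(y_true, always_negative))          # → 0.9, looks good
print(precision_recall(y_true, always_negative))  # → (0.0, 0.0), finds nothing
```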

Deep Learning for Coders with fastai and PyTorch, by Jeremy Howard and Sylvain Gugger. The book’s examples are written in Jupyter notebooks, all available online, and, on a computer at least, the Kindle edition renders really well. Apart from being a useful overview of both PyTorch and Jupyter, this is a good book for non-specialist software engineers to get started with deep learning. It’s clear the book is written by people who teach, as their stellar fast.ai course shows. As a result, the book is well structured, introducing concepts in a sound order, and each chapter ends with a good set of questions for revision and retention. For example, training is split across introductory and more advanced chapters (the training introduction in chapter 4 is as accessible an overview as you’re likely to find). There is content on image processing, latent spaces, natural language processing and text classification. And despite the title there’s a neat overview of random forests. The back half writes out ML formulae in Python, which is going to be intuitive to programmers. One thing I really appreciated throughout the text was the references to academic and breakthrough papers. It’s also nice to see a really good book covering PyTorch.
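The training introduction builds up a gradient descent training loop. A common outline for that loop is: initialise parameters, predict, compute a loss, compute gradients, step, and repeat. The sketch below follows that outline in plain Python for the simplest possible model, a line `y = w * x + b` fitted with mean squared error. It is not the book's image classifier. The learning rate and epoch count are assumptions chosen for this toy data. Written out like this, the formulae are just loops and sums.

```python
def train_linear(xs, ys, lr=0.05, epochs=1000):
    """Fit y = w * x + b by full-batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0                      # initialise parameters
    n = len(xs)
    for _ in range(epochs):              # repeat for a fixed number of epochs
        preds = [w * x + b for x in xs]  # predict
        # Gradients of MSE = mean((pred - y)^2) with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, x, y in zip(preds, xs, ys)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        w -= lr * grad_w                 # step against the gradient
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2 * x + 1 for x in xs]             # data generated from y = 2x + 1
w, b = train_linear(xs, ys)
print(round(w, 3), round(b, 3))          # → 2.0 1.0
```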

Designing Machine Learning Systems: An Iterative Process for Production-Ready Applications, by Chip Huyen. This is a great book and, for me, the best in its category. There's a lot of content online about production ML (or 'MLOps'), but it's not particularly usable or useful compared to what you can find on DevOps or SRE (it's complicated! models fail differently! our product can help!). In many respects, we don't seem to have moved the discourse much past the original THICCOTD paper ("Machine Learning: The High-Interest Credit Card of Technical Debt"), which is going on 10 years old at this point. Huyen's book changes that as a reference-level text on deploying and maintaining production-grade machine learning models, as well as a strong overview of the development process. Of all the books in this list, it might be the best starting point for an engineer who's completely new to ML, in the sense that you could branch out from it into any of the other books mentioned here. Researchers and scientists who are expert in ML itself but unfamiliar with software product development and operations can also benefit from it. The book emphasises principles and systems as well as tooling and operations, so I expect it will have a good shelf life. I also really appreciated its focus on online and low-latency use cases, which are increasingly important to internet-enabled businesses, and its identification of the system and process boundaries between researchers and engineers.

Data Science & Analysis

xkcd: Correlation

The Data Science Design Manual, by Steven S. Skiena. Well known for his immensely popular book on algorithms, Skiena is a lecturer and teacher, and it shows. The book is designed as an undergraduate or early graduate level introduction to the field. It’s well organised without being donnish, and has motivating examples throughout. It’s also thorough, covering topics such as math basics, statistics, data preparation, visualisation, regression, ranking, clustering and classification. There's also a comprehensive companion website with online lectures.

An Introduction to Statistical Learning: with Applications in Python (ISL), by Gareth James, Trevor Hastie, Robert Tibshirani, Daniela Witten, and Jonathan Taylor. This is a great introduction to learning techniques and to approaches for selecting and evaluating them. The book describes itself as a less formal and more accessible version of The Elements of Statistical Learning. For a working engineer, using Python is ideal (the first version used R, a choice reflecting the book's origins in statistics rather than software). The examples are well done, and there's more on the companion website.
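One evaluation approach ISL covers is k-fold cross-validation. You split the data into k folds, train on k - 1 of them, measure the error on the held-out fold, and average over all k folds. Here is a minimal plain-Python sketch, not the book's code. `fit` and `predict` are hypothetical callables standing in for any model, and the toy data is invented. Comparing a mean-only baseline with a least-squares line shows how the averaged held-out error helps you choose between models.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Shuffle row indices once, then deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validate(xs, ys, fit, predict, k=5, seed=0):
    """Average held-out mean squared error over k folds."""
    fold_errors = []
    for fold in k_fold_indices(len(xs), k, seed):
        held_out = set(fold)
        train = [i for i in range(len(xs)) if i not in held_out]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        sq_errors = [(predict(model, xs[i]) - ys[i]) ** 2 for i in fold]
        fold_errors.append(sum(sq_errors) / len(sq_errors))
    return sum(fold_errors) / len(fold_errors)


# Candidate 1: always predict the training mean.
def fit_mean(xs, ys):
    return sum(ys) / len(ys)


def predict_mean(model, x):
    return model


# Candidate 2: ordinary least-squares line.
def fit_line(xs, ys):
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def predict_line(model, x):
    slope, intercept = model
    return slope * x + intercept


xs = [float(x) for x in range(20)]
ys = [3 * x + (1 if int(x) % 2 else -1) for x in xs]  # a line plus alternating noise

print(cross_validate(xs, ys, fit_mean, predict_mean))  # large held-out error
print(cross_validate(xs, ys, fit_line, predict_line))  # much smaller held-out error
```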

Companion Mathematics

xkcd: Purity

Work can get done in applied machine learning without mathematics. It is useful, though, for developing intuition about what these systems are doing, it helps with working through the introductory books, and it is needed for the more advanced books (which we don’t cover here) and, often, for key papers. For what it's worth, when it comes to applied machine learning, time spent on the foundational/math side never feels wasted. With that in mind, some good topics to know are linear algebra, statistics and regression, and probability. On to the books:

A Programmer's Introduction to Mathematics, by Jeremy Kun. It starts with polynomials and sets, then goes on to cover areas like graphs, linear algebra and calculus. The book does a good job of starting from a programmer’s frame of reference and shifting toward what mathematicians are more used to, providing progressively detailed explanations and mostly avoiding big conceptual jumps. It's very much a book worth spending time with, pen and paper in hand, and it might just (re)kindle an interest in math for you, if it’s not already your thing.

Linear Algebra: Step by Step, by Kuldeep Singh. There are a lot of linear algebra books, and I really like this one as a companion text and for self-study catch-up, especially if it’s been a while since you’ve "done math". As an added bonus, it formats well on Kindle. Linear algebra is everywhere in machine learning, and you’ll see it even in basic material, since a lot of real-world machine (and especially deep) learning involves matrix swizzling. I’m not sure what it is about this book that makes the topic accessible to me, but I wish there was a Kuldeep Singh book for every branch of math.
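As a small example of that matrix swizzling, a fully connected (dense) neural network layer is a matrix-vector product plus a bias, y = Wx + b. Running the layer over a batch of inputs is a matrix-matrix product. The plain-Python sketch below spells this out with lists of lists, and the weights and inputs are arbitrary illustrative numbers. Real frameworks do the same arithmetic in optimised native code.

```python
def matvec(W, x):
    """Matrix-vector product: each output is the dot product of one row of W with x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]


def dense(W, b, x):
    """A dense layer without an activation function: y = Wx + b."""
    return [z + b_i for z, b_i in zip(matvec(W, x), b)]


def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    """Matrix-matrix product: entry (i, j) is row i of A dotted with column j of B."""
    cols = list(zip(*B))
    return [[sum(a * c for a, c in zip(row, col)) for col in cols] for row in A]


W = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # 3 outputs, 2 inputs
b = [0.0, 0.0, 1.0]
print(dense(W, b, [1.0, -1.0]))  # → [-1.0, -1.0, 0.0]

# A batch of inputs stored as rows: X @ W^T gives one row of layer outputs per input
# (the bias is left out so the result lines up directly with matvec).
X = [[1.0, -1.0], [0.5, 2.0]]
print(matmul(X, transpose(W)))
```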

Probability: For the Enthusiastic Beginner, by David Morin. This is a high-level, well-written overview of probability. It's a real gem and pretty accessible. Probability is useful because machine learning so often deals with real-world uncertainty, and machine learning results are often expressed as likelihoods.
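One common place probability shows up in practice is a classifier's output layer. There, raw scores (logits) are turned into a probability distribution with the softmax function. Here is a minimal plain-Python sketch with made-up scores. Subtracting the maximum score before exponentiating is a standard numerical-stability trick that leaves the result unchanged.

```python
import math


def softmax(scores):
    """Map real-valued scores to probabilities that are positive and sum to 1."""
    m = max(scores)  # shift by the max so exp() cannot overflow
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])  # hypothetical scores for three classes
print([round(p, 3) for p in probs])
print(softmax([1000.0, 1000.0]))  # → [0.5, 0.5], no overflow despite large inputs
```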

Conclusion

A fair argument, one I mentioned in the previous post, is that the machine learning application space is moving quickly, too quickly for books. Especially when it comes to programming, books are inevitably centred around frameworks, and so are much more likely to become outdated than online media. I think this is true, but I believe the books in this list are fairly stable and hold up well (even ones like Deep Learning for Coders with fastai and PyTorch, which is nearly half a decade old). In any case, the suggestion from the introduction stands: do look around for other options if these don't seem like the right ones for you.